Design for the ideal text editor

Update: There is now an RFC for Swiboe defining this design in more detail. Comments welcome.

Last time I discussed my thoughts on the ideal text editor. I also mentioned that nothing quite fits the bill for me right now. Today, I want to discuss a possible design for an editor that I think allows for everything I look for and that is future-proof.

I put a lot of thought into this lately - my frustration with Vim's shortcomings in daily life and my experience writing one of the more successful Vim plugins out there have given me some insights, which I tried to distill into this design. I am currently exploring these ideas in code in Swiboe - my attempt at implementing the editor of my dreams. Contributions are welcome.

The best editor is no editor at all

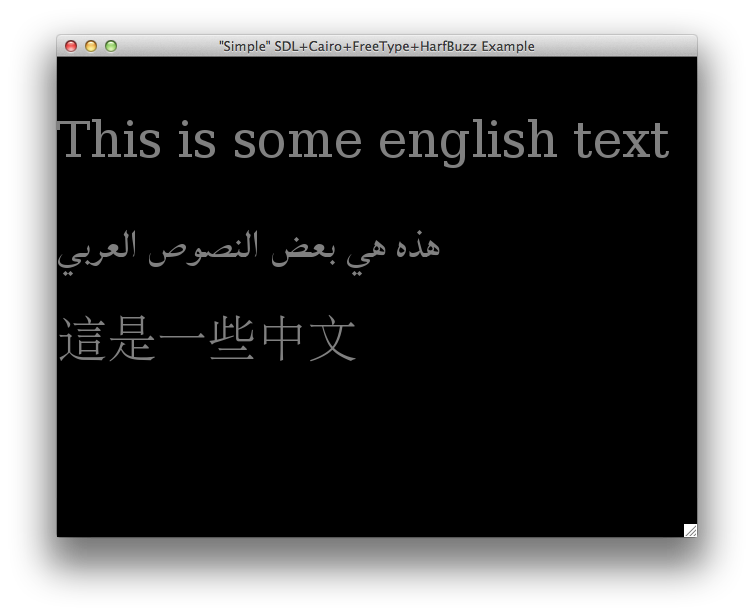

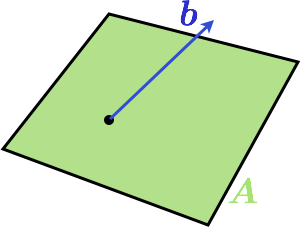

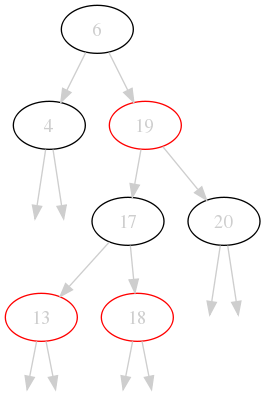

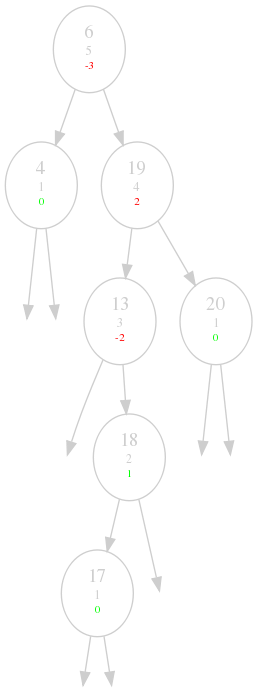

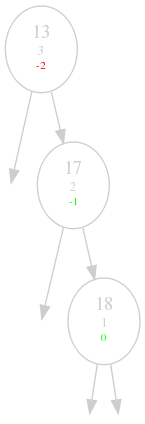

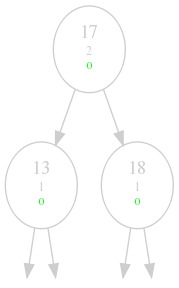

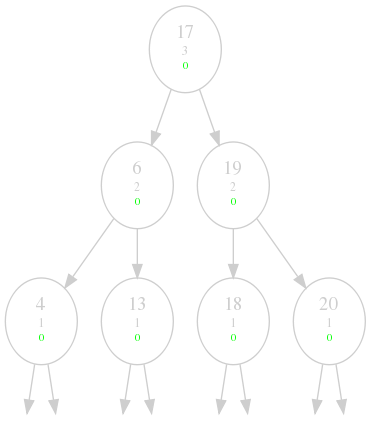

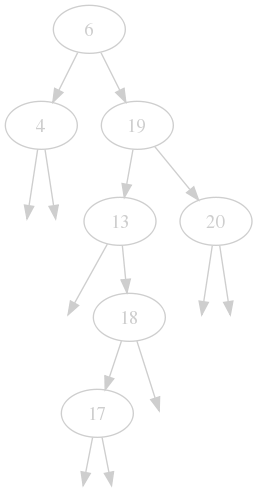

A simple idea got me started: everything is a plugin and a plugin has infinite power. In Swiboe, these are all plugins: the buffer management (editing, creating, loading), the fuzzy searcher, the git integration, the GUI program, the cursor handling in the GUI, the plugin loading - every piece of functionality. Swiboe is really just an operator on a switchboard.

That is where its name comes from: Switch Board Editor.

But how does that work? Let's design two basic plugins that know how to open compressed files into buffers, one that can read Gzip, one that can read Bzip.

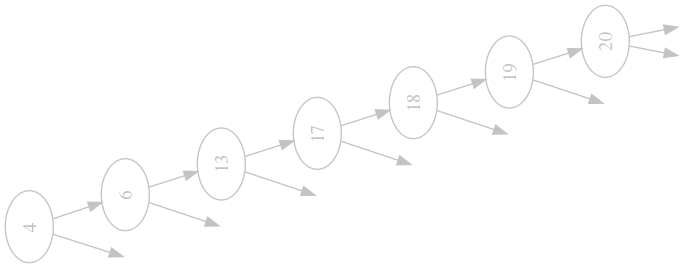

Swiboe listens on a socket and waits for plugins to connect. Once a plugin connects, it registers remote procedure calls (RPCs) which have a name and a priority.

    # In gzip.py
    plugin = connect_to_swiboe("/var/run/swiboe.socket")
    # Register the handler under its RPC name, together with a priority.
    plugin.register_rpc("buffer.open:gzip", buffer_open_gzip, priority=800)

    # In bzip.py
    plugin = connect_to_swiboe("/var/run/swiboe.socket")
    plugin.register_rpc("buffer.open:bzip", buffer_open_bzip, priority=799)

We'll define the buffer_open_gzip function later - buffer_open_bzip follows by analogy.

Some other client (for example the GUI) now wants to open a file:

    gui = connect_to_swiboe("/var/run/swiboe.socket")
    gui.call_rpc("buffer.open", args={
        "uri": "file:///tmp/somefile.txt.gz"
    })

The args will be converted to JSON and handed to the called function.
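To make the JSON step concrete, here is a minimal sketch of how the arguments could travel from caller to handler. The helpers `encode_call` and `dispatch_call`, the stand-in handler `show_uri`, and the message layout are all assumptions made for illustration; Swiboe's actual wire format may look different.

```python
import json


def encode_call(name, args):
    """Serialize an RPC call into a JSON string (hypothetical message layout)."""
    return json.dumps({"function": name, "args": args})


def dispatch_call(message, handler, rpc_context=None):
    """Decode a JSON message and pass its args to the handler as keyword arguments."""
    call = json.loads(message)
    return handler(rpc_context, **call["args"])


def show_uri(rpc_context, uri, i_know_what_i_am_doing=False):
    # Stand-in handler that only reports what it received.
    return (uri, i_know_what_i_am_doing)


message = encode_call("buffer.open", {"uri": "file:///tmp/somefile.txt.gz"})
result = dispatch_call(message, show_uri)
```

Decoding into keyword arguments means a handler can declare optional parameters with defaults, and callers only send the keys they care about.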

The name of the called RPC defines what will get called: Swiboe finds all registered RPCs whose names start with the called name, so in our case both buffer.open:gzip and buffer.open:bzip match. The names of the RPCs are really just a convention: dot.separated.namespaces:name_of_implementing_plugin. This allows callers to call a very specific function:

    # I know that somefile.txt.bz is really a gzip file, so call the gzip
    # handler directly. Signal it through 'i_know_what_i_am_doing' that we are
    # aware that the extension does not match.
    gui.call_rpc("buffer.open:gzip", args={
        "uri": "file:///tmp/somefile.txt.bz",
        "i_know_what_i_am_doing": True,
    })

It also allows calling a very broad range of functions:

    # Call all buffer functions that start with o - that is probably not
    # very useful.
    gui.call_rpc("buffer.o", args={
        "uri": "file:///tmp/somefile.txt.bz",
    })
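The prefix matching described above can be sketched in a few lines. This is only an illustration: `matching_rpcs` is a hypothetical helper, and `buffer.create:core` is an assumed name added to show a non-matching entry.

```python
def matching_rpcs(registered_names, called_name):
    """Return all registered RPC names that start with the called name."""
    return [name for name in registered_names if name.startswith(called_name)]


# "buffer.create:core" is an assumed name, used here only as a counterexample.
registered = ["buffer.open:gzip", "buffer.open:bzip", "buffer.create:core"]

broad = matching_rpcs(registered, "buffer.open")          # both open handlers
specific = matching_rpcs(registered, "buffer.open:gzip")  # only the gzip handler
```

A plain string prefix test is enough to cover both the very specific and the very broad calls shown above.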

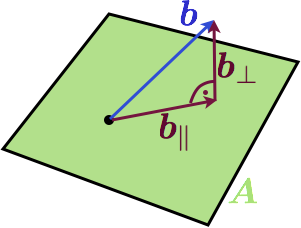

After the functions are determined, Swiboe sorts them by priority and tries them one after the other. The functions work like event handlers in JavaScript or GUIs - they can choose to handle the call and return a value, or they can pass the call on to the next handler. For example, the gzip handler could look like this:

    import gzip

    def buffer_open_gzip(rpc_context, uri, i_know_what_i_am_doing=False):
        if not uri.endswith(".gz") and not i_know_what_i_am_doing:
            # Let's inform Swiboe that we do not know what to do with this
            # request.
            return rpc_context.finish(NOT_HANDLED)

        file_path = uri[7:]  # Removes file://
        # Read in text mode so the content is a string that can be sent as
        # JSON, and close the file when done.
        with gzip.open(file_path, "rt") as f:
            content = f.read()

        # Call an RPC to create a new buffer.
        buffer_create_rpc = rpc_context.call_rpc("buffer.create", {
            "content": content,
            "uri": uri,
        })

        # Wait for it to succeed or fail and return this value to our caller.
        return rpc_context.finish(buffer_create_rpc.wait())
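The way Swiboe walks through the matching handlers can be sketched as a simple loop. This is a sketch under assumptions, not Swiboe's implementation: it assumes that higher priority values run first, and `dispatch`, `open_gzip`, `open_bzip` and the `NOT_HANDLED` sentinel defined here are stand-ins for illustration.

```python
NOT_HANDLED = object()  # Sentinel; stands in for the constant used in the handler above.


def dispatch(handlers, **args):
    """Try handlers from highest to lowest priority until one handles the call.

    `handlers` is a list of (priority, function) pairs.
    """
    for _priority, function in sorted(handlers, key=lambda h: h[0], reverse=True):
        result = function(**args)
        if result is not NOT_HANDLED:
            return result
    # Nobody wanted the call.
    return NOT_HANDLED


def open_gzip(uri):
    return "gzip buffer" if uri.endswith(".gz") else NOT_HANDLED


def open_bzip(uri):
    return "bzip buffer" if uri.endswith(".bz") else NOT_HANDLED


handlers = [(799, open_bzip), (800, open_gzip)]
opened = dispatch(handlers, uri="file:///tmp/somefile.txt.gz")
```

With this shape, a handler that declines a request costs one extra call, and the order in which plugins registered does not matter.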

Pro: Events are nothing special

This design can also express something like Vim's autocommands - which are just callbacks, really.

A callback is just an ordinary RPC. For example, when the buffer plugin creates a new buffer, it calls on.buffer.created, but does not wait for this call to finish. Everybody interested in buffer creation can just register a matching RPC - with an I_DO_NOT_CARE priority. This signals to Swiboe that all these functions can be called in parallel.

If that is not desired, a priority can be specified to decide when to run - if two plugins conflict they can fix their priority values to make things work.
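A rough sketch of how such an event could be delivered follows. Several things here are assumptions: `I_DO_NOT_CARE` is modelled as `None`, listeners with an explicit priority are assumed to run first and in descending order, and `fire_event` is a hypothetical helper. Unlike the buffer plugin described above, the sketch waits for the listeners, but only so that their results can be inspected.

```python
from concurrent.futures import ThreadPoolExecutor

I_DO_NOT_CARE = None  # Assumed marker for "no ordering requirement".


def fire_event(listeners, **args):
    """Run prioritized listeners in order, then all remaining ones in parallel.

    `listeners` is a list of (priority, function) pairs.
    """
    ordered = [l for l in listeners if l[0] is not I_DO_NOT_CARE]
    unordered = [l for l in listeners if l[0] is I_DO_NOT_CARE]

    results = []
    for _priority, function in sorted(ordered, key=lambda l: l[0], reverse=True):
        results.append(function(**args))

    # Listeners without a priority do not depend on each other,
    # so they can run concurrently.
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(function, **args) for _priority, function in unordered]
        results.extend(future.result() for future in futures)
    return results


results = fire_event(
    [
        (I_DO_NOT_CARE, lambda buffer_id: f"highlight {buffer_id}"),
        (10, lambda buffer_id: f"lint {buffer_id}"),
        (20, lambda buffer_id: f"detect filetype {buffer_id}"),
    ],
    buffer_id=1,
)
```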

Pro: Extensibility

A plugin is almighty in this design. Want to add functionality to buffer.new:core? Just override it with something that has a higher priority and do something else in it. Want to filter out the files listed in your .gitignore from fuzzy find? Just write a fuzzy_find.files:git that is called before fuzzy_find.files:core, calls it directly and filters its results before passing them on. Want to use the tab key for every kind of smart content expansion? No problem, just define the order of functions to call and Swiboe will handle it for you.
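The fuzzy find override could look roughly like the following. This is a sketch with plain functions instead of RPCs: `fuzzy_find_files_core` is a stand-in with a made-up file list, and the ignore patterns are matched with `fnmatch`, which is simpler than real .gitignore semantics.

```python
from fnmatch import fnmatch


def fuzzy_find_files_core(query):
    # Stand-in for the core finder: returns every known path containing the query.
    known_files = ["src/main.rs", "target/debug/main", "README.md", "notes.tmp"]
    return [path for path in known_files if query in path]


def fuzzy_find_files_git(query, ignore_patterns=("target/*", "*.tmp")):
    """Call the core finder directly and drop paths matching an ignore pattern."""
    results = fuzzy_find_files_core(query)
    return [
        path
        for path in results
        if not any(fnmatch(path, pattern) for pattern in ignore_patterns)
    ]


filtered = fuzzy_find_files_git("main")
```

The wrapper never reimplements the search; it only post-processes what the core plugin returns, which is the kind of layering the priority system is meant to allow.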

Everything is a plugin and therefore everything can be changed by other plugins.

Pro: Simplicity

There is only one concept to understand in Swiboe: this layered RPC system. Everything else is expressed through it.

There is a little more to the story: RPCs can also stream results, for example. The fuzzy file finder uses this to get partial results to the GUI as fast as possible. But the core philosophy is just this one concept.
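Streaming can be pictured with a Python generator that hands out matches in small batches. This is only an analogy for the idea, not Swiboe's streaming protocol; `fuzzy_find_files_streaming` and its `chunk_size` parameter are hypothetical.

```python
def fuzzy_find_files_streaming(query, paths, chunk_size=2):
    """Yield matching paths in small batches instead of one final list."""
    batch = []
    for path in paths:
        if query in path:
            batch.append(path)
            if len(batch) == chunk_size:
                yield batch
                batch = []
    # Flush whatever is left once all paths have been scanned.
    if batch:
        yield batch


shown = []
for partial in fuzzy_find_files_streaming("a", ["a1", "b", "a2", "a3"]):
    # A GUI could redraw its result list here, before the search is done.
    shown.extend(partial)
```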

The big concern: Performance

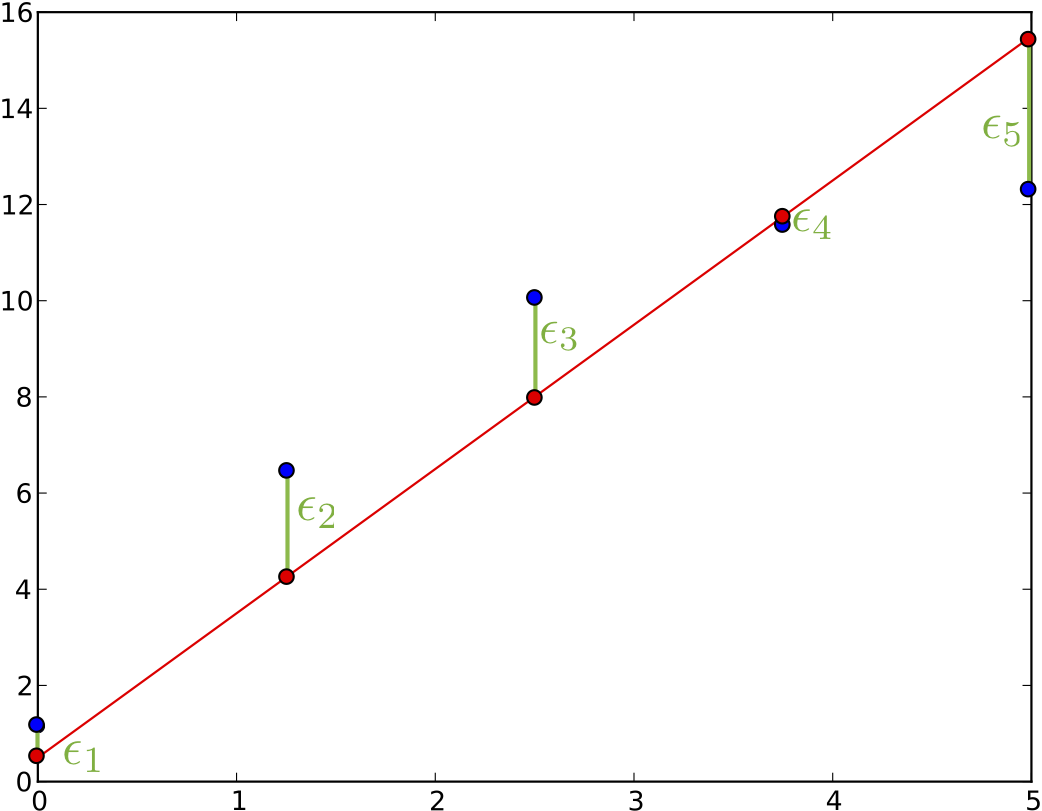

Swiboe is an RPC system using Unix domain sockets right now. An RPC has more overhead than a simple function call. My benchmark is creating a buffer and immediately deleting it - these are 4 round trips, or 8 messages:

    client (create request) -> Swiboe
    Swiboe (create request) -> buffer plugin
    buffer plugin (create response) -> Swiboe
    Swiboe (create response) -> client

    client (delete request) -> Swiboe
    Swiboe (delete request) -> buffer plugin
    buffer plugin (delete response) -> Swiboe
    Swiboe (delete response) -> client

Quick tangent: this shows well why I chose Swiboe as the name. It connects plugins until they have found the right partner to talk to, enabling communication with discretion and speed.

This flow takes ~290μs on my system - roughly 500 times slower than doing the same with Python function calls. However, I have not tried to speed it up in any way yet - both the protocol and the encoding are naive right now.
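To get a feeling for this kind of overhead on your own machine, a comparison can be sketched like this. It is not the benchmark used above: it measures a single JSON round trip over a connected socket pair inside one process and one thread, whereas the real flow crosses process boundaries four times. The `handle` function and the newline-terminated JSON messages are assumptions for illustration.

```python
import json
import socket
import time


def handle(request):
    # Stand-in for the work a plugin does on a request.
    return {"ok": True, "echo": request}


def time_function_calls(n):
    """Average seconds per plain function call."""
    start = time.perf_counter()
    for i in range(n):
        handle({"id": i})
    return (time.perf_counter() - start) / n


def time_socket_round_trips(n):
    """Average seconds per JSON request/response over a socket pair."""
    # On POSIX systems this is a connected pair of Unix domain sockets.
    client, server = socket.socketpair()
    try:
        start = time.perf_counter()
        for i in range(n):
            client.sendall(json.dumps({"id": i}).encode() + b"\n")
            # Messages are tiny, so one recv is assumed to return a whole message.
            request = json.loads(server.recv(4096))
            server.sendall(json.dumps(handle(request)).encode() + b"\n")
            response = json.loads(client.recv(4096))
            assert response["echo"]["id"] == i
        return (time.perf_counter() - start) / n
    finally:
        client.close()
        server.close()


per_call = time_function_calls(1000)
per_round_trip = time_socket_round_trips(1000)
```

Comparing `per_round_trip` with `per_call` gives a rough idea of what serialization and socket traffic cost, before any scheduling or inter-process effects are added on top.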

Also, I believe the RPCs mainly glue functionality together. Most computation will run in the plugins, in separate processes, in parallel. I believe that sufficient performance for a fluid and responsive experience can be reached.

And of course, performance is temporary, design is permanent. So let's start with getting this right first.

Disclaimer

There is a lot of dreaming in this post. There is no Python client yet for Swiboe. (Update: no longer true.) This design is still subject to change and not completely implemented in Swiboe. There is no gzip or bzip plugin.

Also, the exact design and protocol of the layered RPC system is not written down anywhere yet. (Update: no longer true)

Swiboe is open for contributions! If you want to design a cool text editor with me, feel free to reach out.